OpenAI is making headlines again with its latest viral AI application, ChatGPT. What is it and how does it work?

For years, people have worried about AI (artificial intelligence) and its impending takeover of the world… but who knew that it would begin with the art and literature industries?

It has been months since OpenAI dominated the internet with its AI image generator Dall-E 2. Now, thanks to ChatGPT, a chatbot built on the company’s GPT-3 technology, OpenAI is back in everyone’s social media feeds.

The name GPT-3 may not be the catchiest, but it is arguably the best-known AI model for processing language on the internet.

What is GPT-3, and how does it power ChatGPT? What can it do, and what in the world is a language processing AI model? Below you will find everything you need to know about OpenAI’s newest viral hit.

What are GPT-3 and ChatGPT?

GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art AI model for language processing developed by OpenAI. It can generate human-like text and has a wide range of applications, including language translation, language modelling, and generating text for applications such as chatbots. With 175 billion parameters, it is one of the largest and most powerful language processing AI models to date.

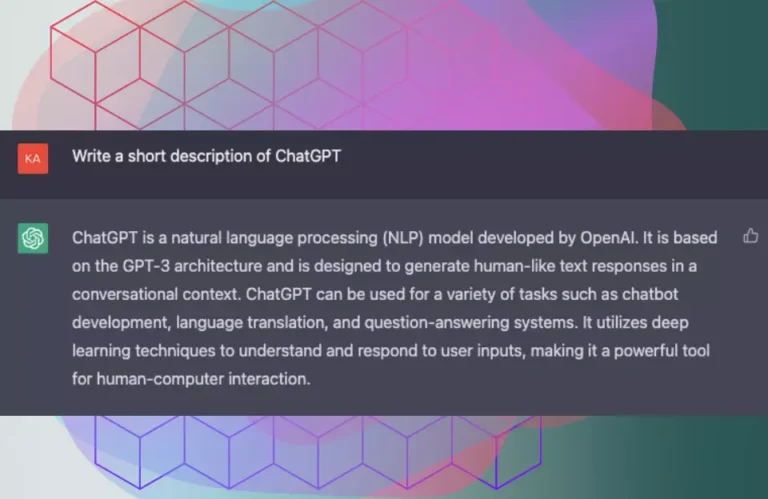

Its best-known use so far is ChatGPT, a highly capable chatbot. To give you a taste of its most basic abilities, we asked GPT-3’s chatbot to write its own description, which you can see above. It’s a little boastful, but completely accurate and arguably very well written.

In less corporate terms, GPT-3 lets a user give a trained AI a wide range of written prompts. These can be questions, requests for a piece of writing on a topic of your choosing, or any number of other written requests.

Above, it described itself as a language processing AI model. This simply means it is a program that can process human language, allowing it to interpret the text it is fed and work out what to return to the user.

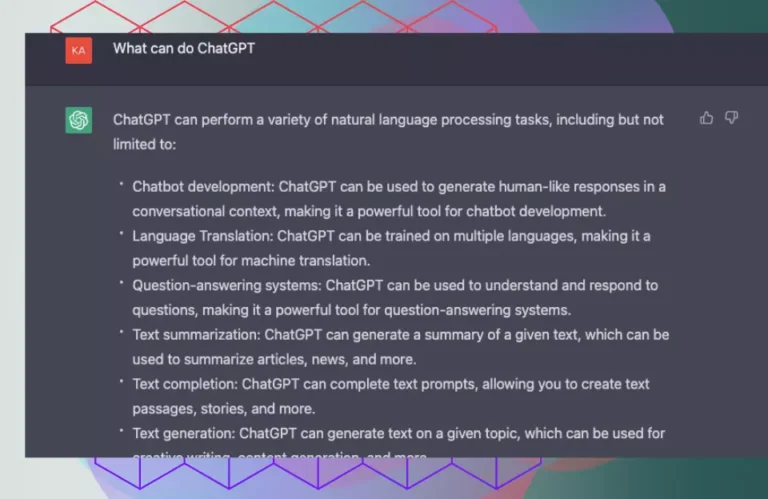

What can it do?

With 175 billion parameters and training on an enormous range of text, GPT-3 can do so much that it is extremely difficult to pin down exactly what it does. As you might expect, language plays the central role. It can’t produce video, sound or images like its sibling Dall-E 2; instead, it has an in-depth grasp of the written word.

As a result, it can do anything from writing poems about sentient farts and cliché rom-coms to explaining quantum mechanics or writing full-length research papers.

While it can be fun to use OpenAI’s years of research to get an AI to write funny stand-up comedy scripts or answer questions about your favourite celebrities, its power lies in its speed and understanding of complicated matters.

Where a person could spend hours researching, understanding and writing an article on quantum mechanics, ChatGPT can produce a well-written alternative in seconds.

It has its limitations, though: the software can easily become confused if your prompt gets too complicated, or even if you head down a road that becomes a little specialized.

Equally, it struggles with anything too recent. Events from the past year will be met with limited knowledge, and the model may occasionally provide incorrect or confused information.

OpenAI is also well aware of the internet’s love of making AI produce dark, harmful or biased content. Just as it did with its Dall-E image generator, it has set ChatGPT up to refuse inappropriate questions and requests for help with dangerous activities.

How does ChatGPT work?

OpenAI trained this model using Reinforcement Learning from Human Feedback (RLHF), with the same methods as InstructGPT but slight differences in the data collection setup. It first trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides, the user and an AI assistant. The trainers had access to model-written suggestions to help them compose their responses. This new dialogue dataset was then mixed with the InstructGPT dataset, which was transformed into a dialogue format.

To create a reward model for reinforcement learning, OpenAI needed comparison data: two or more model responses ranked by quality. To collect it, the company took conversations that AI trainers had with the chatbot, randomly selected a model-written message, sampled several alternative completions and had AI trainers rank them. Using these reward models, the chatbot was then fine-tuned with Proximal Policy Optimization (PPO), and OpenAI repeated this process over several iterations.
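To make the ranking step more concrete, here is a minimal sketch of how a trainer's best-to-worst ranking can be turned into training signal for a reward model. It assumes the pairwise loss described in OpenAI's InstructGPT paper, -log σ(r_preferred − r_rejected), which shrinks as the reward model scores the preferred answer further above the rejected one. The reward scores are made-up numbers standing in for a real reward model, the function names are hypothetical, and the later PPO fine-tuning step is not shown.

```python
import itertools
import math

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the response the trainer ranked higher.
    return list(itertools.combinations(ranked_responses, 2))

def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_w - r_l)), computed in a numerically stable way."""
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def ranking_loss(ranked_responses, reward_fn):
    """Average pairwise loss over every pair drawn from one ranking."""
    pairs = ranking_to_pairs(ranked_responses)
    losses = [pairwise_loss(reward_fn(w), reward_fn(l)) for w, l in pairs]
    return sum(losses) / len(losses)

# Hypothetical example: a trainer ranked three completions A > B > C,
# and a (made-up) reward model currently gives them these scores.
scores = {"A": 2.0, "B": 0.5, "C": -1.0}
ranking = ["A", "B", "C"]
print(ranking_to_pairs(ranking))  # [('A', 'B'), ('A', 'C'), ('B', 'C')]
print(ranking_loss(ranking, scores.get))
```

A ranking of K answers yields K(K−1)/2 comparisons, which is why asking trainers to rank several completions at once is an efficient way to collect comparison data.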

GPT-3’s technology appears simple at first glance: your requests, questions or prompts are answered quickly. But there is far more technology involved in doing this than you might imagine.

The model was trained on a text database drawn from the internet: a whopping 570GB of data from books, web texts, Wikipedia, articles and other pieces of writing online. To be precise, around 300 billion tokens (words and pieces of words) were pumped into the system.

As a language model, it works on probability, guessing what the next word in a sentence should be. To turn this ability into a helpful chatbot, the model then passed through a supervised training stage.
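The idea of "guessing the next word from probabilities" can be illustrated with a toy bigram model, which simply counts which word follows which in its training text. This is a deliberately simplified stand-in: GPT-3 is a transformer neural network that works on tokens and takes far more context into account than the single previous word, so the sketch below only shows the probability idea, not how GPT-3 is built. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the follow-up counts for `word` into probabilities summing to 1."""
    followers = counts[word.lower()]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

def predict_next(counts, word):
    """Pick the single most probable next word (greedy choice)."""
    probs = next_word_probabilities(counts, word)
    return max(probs, key=probs.get) if probs else None

# A tiny, made-up training corpus.
corpus = ("the wood of a tree is brown . the leaves of a tree are green . "
          "a tree is tall")
model = train_bigrams(corpus)
print(next_word_probabilities(model, "tree"))  # 'is' about 0.67, 'are' about 0.33
print(predict_next(model, "a"))  # tree
```

Scaling this up from counting word pairs to a neural network trained on hundreds of billions of tokens is what lets a model like GPT-3 produce fluent paragraphs rather than short, repetitive phrases.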

Here, it was fed inputs such as “What colour is the wood of a tree?”. The team has an acceptable answer in mind, but that doesn’t mean the model will produce it. If the model gets it wrong, the team feeds the correct answer back into the system, teaching it the right response and helping it build its knowledge.

It then goes through a second, similar stage in which the model offers multiple answers and a member of the team ranks them from best to worst, training the model on these comparisons.

What sets this technology apart is how this human feedback shapes its next-word guessing. The model does not learn in real time as you chat with it, but each new round of training improves its understanding of prompts and questions, bringing it a step closer to the ultimate know-it-all.

Are there any other AI generators?

Although GPT-3 has gained attention for its language abilities, it is not the only artificial intelligence with these skills. In 2022, Google’s LaMDA made headlines after an engineer called it so realistic that he believed it was sentient.

In addition, Microsoft, Amazon, Stanford University and many other organizations have developed similar software. Without fart jokes or headlines about sentient AI, these have all received far less attention than OpenAI or Google.

OpenAI has begun opening up access to GPT-3 during its testing period, while Google’s LaMDA is only available to select groups.

Google’s chatbot offers three modes, talk, list and imagine, with examples in each. You can ask it to visualize a world where snakes rule, or to generate a list of steps for learning to ride a unicycle. Or you can just have a chat about what dogs might be thinking.

Where ChatGPT thrives and fails

ChatGPT limitations:

- ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as: (1) during RL training, there’s currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it can answer correctly; and (3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows.
- ChatGPT is sensitive to tweaks to the input phrasing or attempting the same prompt multiple times. For example, given one phrasing of a question, the model can claim to not know the answer, but given a slight rephrase, can answer correctly.
- The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues.
- Ideally, the model would ask clarifying questions when the user provided an ambiguous query. Instead, our current models usually guess what the user intended.
- While we’ve made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. We’re using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We’re eager to collect user feedback to aid our ongoing work to improve this system.

© OpenAI
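One common reason a language model can give different answers to the same prompt is that it samples each next word from a probability distribution instead of always taking the top choice. The sketch below shows that idea using softmax with a temperature setting; it assumes this general technique for illustration only, since OpenAI has not published ChatGPT's exact decoding settings. The candidate words and scores are invented.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Convert raw next-word scores into probabilities.

    A lower temperature sharpens the distribution towards the top choice;
    a higher one flattens it, making less likely words more common.
    """
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(words, scores, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical scores a model might assign to candidate next words.
words = ["brown", "green", "grey"]
scores = [2.0, 1.0, 0.5]

rng = random.Random(0)  # fixed seed so this demo is repeatable
print([sample_next_word(words, scores, 1.0, rng) for _ in range(5)])
print([round(p, 2) for p in softmax(scores, temperature=0.2)])
```

Without a fixed seed, repeated runs can pick different words even though the scores never change, which mirrors how retrying the same prompt can produce a different reply.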

When ChatGPT fails:

The GPT-3 software is obviously impressive, but that doesn’t mean it is flawless. Through ChatGPT, you can see some of its quirks.

For a start, its knowledge of recent events is limited. This is no surprise, given the impossible task of keeping up with world events as they happen and then training the model on that information.

Equally, the model can generate incorrect information, getting answers wrong or misunderstanding what you are trying to ask it.

If you get really niche, or add too many factors to a prompt, it can become overwhelmed or ignore parts of the prompt completely.

If you ask it to write about two people, listing their jobs, names, ages, and where they live, the model might confuse these factors, randomly assigning them to the two characters.

ChatGPT also succeeds in a number of areas. For an AI, it handles ethics and morality quite well.

Given a list of ethical theories or situations, ChatGPT can provide a thoughtful response on how to proceed, taking into account legality, people’s feelings and emotions, and everyone’s safety.

It also remembers any rules you’ve set or information you’ve provided earlier in the conversation.

Two areas where the model has proved strong are its understanding of code and its ability to simplify complicated matters. ChatGPT can build an entire website layout for you, or write an easy-to-understand explanation of dark matter in a few seconds.

Where ethics and artificial intelligence meet

Artificial intelligence and ethical concerns go together like fish and chips or Batman and Robin. When you put technology like this in the hands of the general public, there are many limitations and concerns to keep in mind.

Due to the system’s reliance on words from the internet, it can pick up on the internet’s biases, stereotypes, and opinions. In other words, depending on what you ask, you may find jokes or stereotypes about particular groups or political figures.

For example, when asked to perform stand-up comedy, the system can occasionally throw in jokes about ex-politicians or groups who are often featured in comedy bits.

Equally, the model’s love of internet forums and articles also gives it access to fake news and conspiracy theories. These can feed into the model’s knowledge, sprinkling in facts or opinions that aren’t exactly true.

In places, OpenAI has built safeguards into how the chatbot responds to prompts. Ask how to bully someone, and you’ll be told bullying is bad. Ask for a gory story, and the chat system will shut you down. The same goes for requests to teach you how to manipulate people or build dangerous weapons.

Artificially intelligent eco-systems

Artificial intelligence has been in use for years, but it is currently going through a stage of increased interest, driven by developments across the likes of Google, Meta, Microsoft and just about every big name in tech.

However, it is OpenAI that has attracted the most attention recently. The company has now made an AI image generator and a highly intelligent chatbot, and is developing Point-E, a way to create 3D models from written prompts.

OpenAI and its biggest investors have poured billions into creating, training and running these models. In the long run, it could easily prove a worthwhile investment, putting OpenAI at the forefront of AI creative tools.

How Microsoft plans to use ChatGPT in future

OpenAI has had a number of big-name investors in its rise to fame, including Elon Musk, Peter Thiel and LinkedIn co-founder Reid Hoffman. But when it comes to ChatGPT and its real-life uses, it is one of OpenAI’s biggest investors that will get to use it first.

Microsoft made a massive $1 billion investment in OpenAI, and the company is now looking to integrate ChatGPT into its search engine, Bing. Microsoft has been battling to take on Google in search for years, looking for any feature that can help it stand out.

Last year, Bing handled less than 10 per cent of the world’s internet searches. While that sounds tiny, it says more about Google’s grip on the market; Bing still stands out as one of the most popular alternatives.

By integrating ChatGPT, Bing hopes to better understand users’ queries and offer a more conversational search engine.

It is currently unclear how far Microsoft plans to integrate ChatGPT into Bing, but this will likely begin with stages of testing. A full implementation could risk Bing being caught up in GPT-3’s occasional bias, which can really delve deep into stereotypes and politically